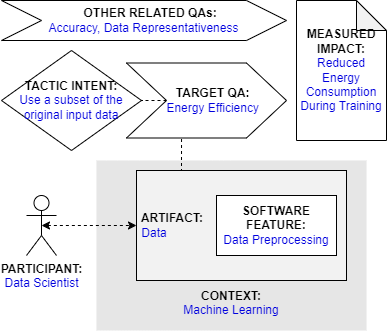

Tactic: Apply Sampling Techniques

Tactic sort:

Awesome Tactic

Type: Architectural Tactic

Category: green-ml-enabled-systems

Title

Apply Sampling Techniques

Description

The size of the input data correlates positively with the energy consumed by computation. Reducing the input size can therefore improve the energy efficiency of machine learning. One way to do this is to use only a subset of the original input data, which is called sampling. There are different ways of conducting sampling (e.g., simple random sampling, systematic sampling). As an example, Verdecchia et al. (2022) used stratified sampling, which means randomly selecting data points from homogeneous subgroups of the original dataset.
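As an illustration of the stratified sampling described above, the following is a minimal sketch using only the Python standard library. It is not the procedure used by Verdecchia et al. (2022); the function name `stratified_sample`, its parameters, and the toy "ham"/"spam" dataset are assumptions made for this example.

```python
import random
from collections import defaultdict


def stratified_sample(rows, label_of, fraction, seed=0):
    """Return about `fraction` of `rows`, sampled separately within each stratum.

    Hypothetical helper for illustration; `label_of` maps a row to its stratum.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    rng = random.Random(seed)  # fixed seed so the subset is reproducible

    # Group rows into strata (homogeneous subgroups) by their label.
    strata = defaultdict(list)
    for row in rows:
        strata[label_of(row)].append(row)

    sample = []
    for members in strata.values():
        # Keep at least one row per stratum so no subgroup disappears.
        k = max(1, round(len(members) * fraction))
        sample.extend(rng.sample(members, k))
    return sample


# Toy dataset (assumed for illustration): 90 "ham" rows and 10 "spam" rows.
data = [(i, "ham") for i in range(90)] + [(i, "spam") for i in range(90, 100)]
subset = stratified_sample(data, label_of=lambda r: r[1], fraction=0.2)
```

With `fraction=0.2`, the subset holds 20 of the 100 rows and keeps the original 9:1 class ratio, so a model trained on it sees one fifth of the data while each subgroup stays represented. Whether accuracy remains acceptable at a given fraction has to be checked per dataset and model.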

Participant

Data Scientist

Related software artifact

Data

Context

Machine Learning

Software feature

Data Preprocessing

Tactic intent

Enhance energy efficiency by using a subset of the original input data for training and inference

Target quality attribute

Energy Efficiency

Other related quality attributes

Accuracy, Data Representativeness

Measured impact

Sampling can yield energy savings during model training with only negligible reductions in accuracy. Verdecchia et al. (2022) achieved a decrease in energy consumption of up to 92%.

Source

Verdecchia, R., Cruz, L., Sallou, J., Lin, M., Wickenden, J., & Hotellier, E. (2022, June). Data-Centric Green AI: An Exploratory Empirical Study. In 2022 International Conference on ICT for Sustainability (ICT4S) (pp. 35-45). IEEE. DOI: https://doi.org/10.1109/ICT4S55073.2022.00015

Wang, Y., Jiang, Z., Chen, X., Xu, P., Zhao, Y., Lin, Y., & Wang, Z. (2019). E2-Train: Training State-of-the-art CNNs with Over 80% Energy Savings. In H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, & R. Garnett (Eds.), Advances in Neural Information Processing Systems (Vol. 32). Curran Associates, Inc.

Graphical representation