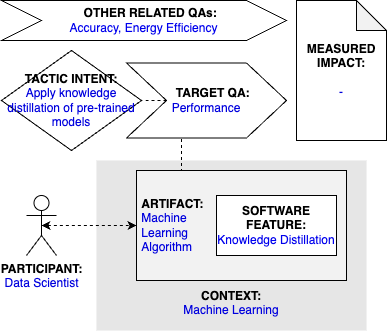

Tactic: Consider Knowledge Distillation

Tactic sort: Awesome Tactic

Type: Architectural Tactic

Category: green-ml-enabled-systems

Title

Consider Knowledge Distillation

Description

Knowledge distillation is a technique in which a large, complex model (the teacher) is used to train a smaller, simpler model (the student). The goal is to transfer what the teacher has learned to the student, so that the student achieves comparable performance while requiring fewer computational resources. When models are evaluated on both accuracy and energy consumption, knowledge distillation improves performance.
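The teacher-to-student transfer described above is often implemented as a combined loss. The following is a minimal sketch, not taken from the cited sources: it assumes one common formulation in which the student matches the teacher's temperature-softened output distribution (a KL-divergence term) while also fitting the true label (a cross-entropy term). The function names and the `temperature` and `alpha` parameters are illustrative assumptions.

```python
import math


def log_softmax(logits, temperature=1.0):
    """Temperature-scaled log-softmax; a higher temperature softens the distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum for z in scaled]


def distillation_loss(student_logits, teacher_logits, true_label,
                      temperature=2.0, alpha=0.5):
    """Weighted sum of a soft-target term and a hard-label term (assumed formulation)."""
    log_p_teacher = log_softmax(teacher_logits, temperature)
    log_p_student = log_softmax(student_logits, temperature)

    # Soft-target term: KL(teacher || student) on the softened distributions.
    kl = sum(math.exp(lt) * (lt - ls)
             for lt, ls in zip(log_p_teacher, log_p_student))

    # Hard-label term: cross-entropy of the student at temperature 1.
    ce = -log_softmax(student_logits)[true_label]

    # The temperature**2 factor keeps the soft-term gradient scale comparable
    # across temperatures; alpha balances the two terms.
    return alpha * temperature ** 2 * kl + (1 - alpha) * ce


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]        # hypothetical teacher logits for 3 classes
    student_close = [3.5, 1.2, 0.4]  # student that roughly agrees with the teacher
    student_far = [0.5, 1.0, 4.0]    # student that disagrees with the teacher
    print(distillation_loss(student_close, teacher, true_label=0))
    print(distillation_loss(student_far, teacher, true_label=0))
```

In this sketch a student whose logits are close to the teacher's receives a lower loss than one that disagrees, which is the signal a training loop would minimize. In practice the logits would come from the two models evaluated on the same input batch, and the values of `temperature` and `alpha` would be tuned for the task.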

Participant

Data Scientist

Related software artifact

Machine Learning Algorithm

Context

Machine Learning

Software feature

Knowledge Distillation

Tactic intent

Improve energy efficiency by applying knowledge distillation to pre-trained models that are too large for a given task.

Target quality attribute

Performance

Other related quality attributes

Accuracy, Energy Efficiency

Measured impact

< unknown >

Source

Shanbhag, S., Chimalakonda, S., Sharma, V. S., & Kaulgud, V. (2022, June). Towards a Catalog of Energy Patterns in Deep Learning Development. In Proceedings of the International Conference on Evaluation and Assessment in Software Engineering 2022 (pp. 150-159). [DOI](https://doi.org/10.1145/3530019.3530035)

Yang, H., Zhu, Y., & Liu, J. (2019). Energy-Constrained Compression for Deep Neural Networks Via Weighted Sparse Projection and Layer Input Masking. International Conference on Learning Representations (ICLR) (ICDCSW). 55–62. [DOI](https://doi.org/10.48550/arXiv.1806.04321)

Graphical representation