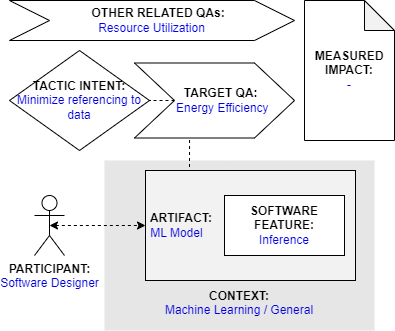

Tactic: Minimize Referencing to Data

Tactic sort:

Awesome Tactic

Type: Architectural Tactic

Category: green-ml-enabled-systems

Title

Minimize Referencing to Data

Description

The ML workflow reads and writes enormous amounts of data. Reading data means retrieving information from storage, while writing data means storing or updating that information. Each operation moves data and occupies memory, so redundant reads and writes add data movement and memory usage that increase the energy consumption of computing. To avoid non-essential referencing of data, read and write operations must be designed carefully.
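As an illustration only, the sketch below contrasts a loader that goes back to storage on every pass with one that reads the file once and reuses the in-memory copy. The class `CountingReader`, its methods, and the tiny CSV file are hypothetical names invented for this example, not part of the cited catalog; the read counter is a stand-in for actual energy measurements, which this sketch does not attempt.

```python
import csv
import os
import tempfile


class CountingReader:
    """Hypothetical loader that counts how often storage is actually read."""

    def __init__(self, path):
        self.path = path
        self.reads = 0       # number of times the file was opened and parsed
        self._cache = None   # in-memory copy, filled on first cached load

    def load_uncached(self):
        # Every call goes back to storage and re-parses the file.
        self.reads += 1
        with open(self.path, newline="") as f:
            return [[float(x) for x in row] for row in csv.reader(f)]

    def load_cached(self):
        # Only the first call touches storage; later calls reuse the copy.
        if self._cache is None:
            self._cache = self.load_uncached()
        return self._cache


def mean_of_first_column(rows):
    return sum(r[0] for r in rows) / len(rows)


def run(loader_fn, passes):
    # Stand-in for repeated passes over the same data (e.g. epochs or requests).
    return [mean_of_first_column(loader_fn()) for _ in range(passes)]


def demo():
    # Write a small throwaway dataset so the example is self-contained.
    fd, path = tempfile.mkstemp(suffix=".csv")
    with os.fdopen(fd, "w", newline="") as f:
        csv.writer(f).writerows([[1.0, 2.0], [3.0, 4.0]])
    try:
        naive = CountingReader(path)
        run(naive.load_uncached, passes=5)
        cached = CountingReader(path)
        run(cached.load_cached, passes=5)
        return naive.reads, cached.reads
    finally:
        os.remove(path)


if __name__ == "__main__":
    print(demo())
```

Keeping the data in memory trades additional memory for fewer storage reads, so this approach presumably suits data that fits comfortably in memory and is reused across passes.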

Participant

Software Designer

Related software artifact

ML Model

Context

Machine Learning, General

Software feature

Inference

Tactic intent

Improve energy efficiency by avoiding unnecessary data read/write operations

Target quality attribute

Energy Efficiency

Other related quality attributes

Resource Utilization

Measured impact

< unknown >

Source

Shanbhag, S., Chimalakonda, S., Sharma, V. S., & Kaulgud, V. (2022, June). Towards a Catalog of Energy Patterns in Deep Learning Development. In Proceedings of the International Conference on Evaluation and Assessment in Software Engineering 2022. 150–159. (DOI: https://doi.org/10.1145/3530019.3530035)

Graphical representation