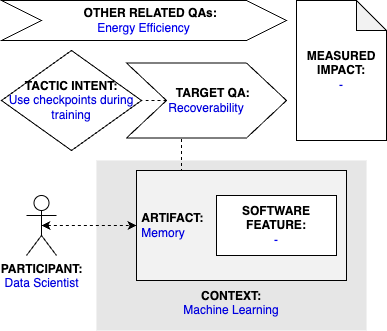

Tactic: Use Checkpoints During Training

Tactic sort:

Awesome Tactic

Type: Architectural Tactic

Category: green-ml-enabled-systems

Title

Use Checkpoints During Training

Description

Training is an energy-intensive stage of the machine learning life cycle and can run for long periods. A failure or hardware error can terminate training before it completes. Without checkpoints, the training process must then be restarted from the beginning. With checkpoints, the model's training state is saved at regular intervals, so after a premature termination training can resume from the last checkpoint (Shanbhag et al., 2022). Using checkpoints during training improves the robustness of an ML system.
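The save-and-resume cycle described above can be illustrated with a minimal sketch. It is not taken from Shanbhag et al.; it uses only the Python standard library and a toy one-parameter "model" so that it runs anywhere. The names `save_checkpoint`, `load_checkpoint`, `train`, `checkpoint_every`, and `fail_at` are hypothetical and exist only for this illustration. A real deep learning project would more likely use its framework's own checkpoint facilities and would also save optimizer state.

```python
import os
import pickle
import tempfile


def save_checkpoint(path, state):
    """Write the state to a temporary file, then rename it over the target.

    The rename means a crash in the middle of a write leaves the previous
    checkpoint file untouched.
    """
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    with os.fdopen(fd, "wb") as f:
        pickle.dump(state, f)
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path, "rb") as f:
        return pickle.load(f)


def train(path, total_epochs, checkpoint_every=2, fail_at=None):
    """Toy training loop: gradient descent on (w - 3)^2.

    `fail_at` is a hypothetical knob that simulates a hardware failure
    at the start of the given epoch index.
    Returns the final state and the epoch the run started from.
    """
    # Resume from the last checkpoint if one exists, else start fresh.
    state = load_checkpoint(path) or {"epoch": 0, "w": 0.0}
    start_epoch = state["epoch"]

    for epoch in range(start_epoch, total_epochs):
        if fail_at is not None and epoch == fail_at:
            raise RuntimeError("simulated hardware failure")

        grad = 2.0 * (state["w"] - 3.0)
        state["w"] -= 0.1 * grad
        state["epoch"] = epoch + 1

        # Save at regular intervals so at most `checkpoint_every - 1`
        # completed epochs are lost on failure.
        if state["epoch"] % checkpoint_every == 0:
            save_checkpoint(path, state)

    save_checkpoint(path, state)
    return state, start_epoch
```

Calling `train` again with the same path after a failure picks up at the last saved epoch instead of epoch 0. The checkpoint interval is a trade-off: shorter intervals lose less work on failure but spend more time and I/O on saving.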

Participant

Data Scientist

Related software artifact

Memory

Context

Machine Learning

Software feature

< unknown >

Tactic intent

Improve energy efficiency by using checkpoints during training to avoid losing training progress after a premature termination, which would otherwise require restarting the process from the beginning and thereby increase energy consumption.

Target quality attribute

Recoverability

Other related quality attributes

Energy Efficiency

Measured impact

< unknown >

Source

Shriram Shanbhag, Sridhar Chimalakonda, Vibhu Saujanya Sharma, and Vikrant Kaulgud. 2022. Towards a Catalog of Energy Patterns in Deep Learning Development. In Proceedings of the International Conference on Evaluation and Assessment in Software Engineering 2022. 150–159. (DOI: https://doi.org/10.1145/3530019.3530035)

Graphical representation