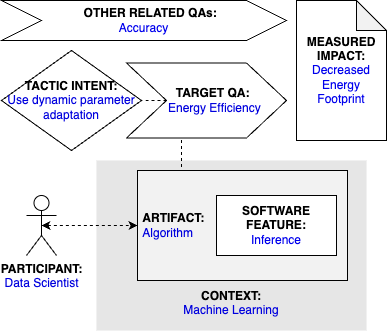

Tactic: Use Dynamic Parameter Adaptation

Tactic sort:

Awesome Tactic

Type: Architectural Tactic

Category: green-ml-enabled-systems

Title

Use Dynamic Parameter Adaptation

Description

Dynamic parameter adaptation means that the hyperparameters of a machine learning model are adapted at run time based on the input data, instead of being fixed to exact values in the algorithm. For example, García-Martín et al. (2021) used an nmin adaptation method for very fast decision trees. The nmin method lets the tree grow faster in branches where there is more confidence in creating a split, and delays the split on less confident branches. This method resulted in decreased energy consumption.
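The idea can be illustrated with a minimal sketch. It assumes that split confidence in a very fast decision tree is judged with the Hoeffding bound, and that nmin (the number of instances a leaf observes before a split attempt) is adapted per leaf by inverting that bound. The function names and the parameters `value_range`, `delta`, and `tau` are hypothetical choices for illustration and are not taken from the cited paper, whose exact rule may differ.

```python
import math


def hoeffding_bound(value_range: float, delta: float, n: int) -> float:
    """Hoeffding bound (epsilon) after n observations, with confidence 1 - delta."""
    return math.sqrt(value_range ** 2 * math.log(1.0 / delta) / (2.0 * n))


def adapted_nmin(delta_g: float, value_range: float, delta: float, tau: float) -> int:
    """Sketch of a per-leaf nmin: instances needed before a split is worth retrying.

    Assumption (illustrative): solve hoeffding_bound(n) <= target for n, where
    target is the observed gap between the two best split candidates (delta_g),
    or the tie threshold tau when the gap is smaller than tau. tau must be > 0.
    """
    target = max(delta_g, tau)
    return math.ceil(value_range ** 2 * math.log(1.0 / delta) / (2.0 * target ** 2))


# A confident leaf (large gap) is re-checked sooner than an uncertain one.
confident = adapted_nmin(delta_g=0.2, value_range=1.0, delta=1e-7, tau=0.05)
uncertain = adapted_nmin(delta_g=0.06, value_range=1.0, delta=1e-7, tau=0.05)
print(confident, uncertain)
```

Under these assumptions, a leaf with a clear winner among split candidates gets a small nmin and splits early, while a leaf with near-equal candidates waits for more data instead of spending energy on repeated split evaluations that are unlikely to succeed.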

Participant

Data Scientist

Related software artifact

Algorithm

Context

Machine Learning

Software feature

Inference

Tactic intent

Improve energy efficiency by designing parameters that are dynamically adapted based on input data

Target quality attribute

Energy Efficiency

Other related quality attributes

Accuracy

Measured impact

Using the nmin adaptation method in very fast decision trees resulted in lower energy consumption on 22 of the 29 tested datasets, with an average decrease of 7% in energy footprint. Additionally, nmin adaptation showed higher accuracy on 55% of the datasets, with an average difference of less than 1%.

Source

Eva García-Martín, Niklas Lavesson, Håkan Grahn, Emiliano Casalicchio, and Veselka Boeva. 2021. Energy-Aware Very Fast Decision Tree. Int. J. Data Sci. Anal. 11, 2 (March 2021), 105–126 (DOI: https://doi.org/10.1007/s41060-021-00246-4)

Graphical representation